Description:

Yoon Kyung Lee, a postdoctoral researcher at our lab, produced this work as a visiting researcher at the University of Texas at Austin, in collaboration with researchers from Microsoft.

Large Language Models (LLMs) have demonstrated surprising performance on many tasks, including writing supportive messages that display empathy. Yoon Kyung had these models generate empathic messages in response to posts describing common life experiences, such as workplace situations, parenting, relationships, and other anxiety- and anger-eliciting situations.

Across two studies (N = 192 and N = 202), her team showed human raters a variety of responses written by several models (GPT-4 Turbo, Llama 2, and Mistral) and had them rate how empathic each response seemed. LLM-generated responses were consistently rated as more empathic than human-written responses. Linguistic analyses also showed that each model writes in a distinct, predictable "style" in its use of punctuation, emojis, and particular words. These results highlight the potential of LLMs to enhance human peer support in contexts where empathy is important.

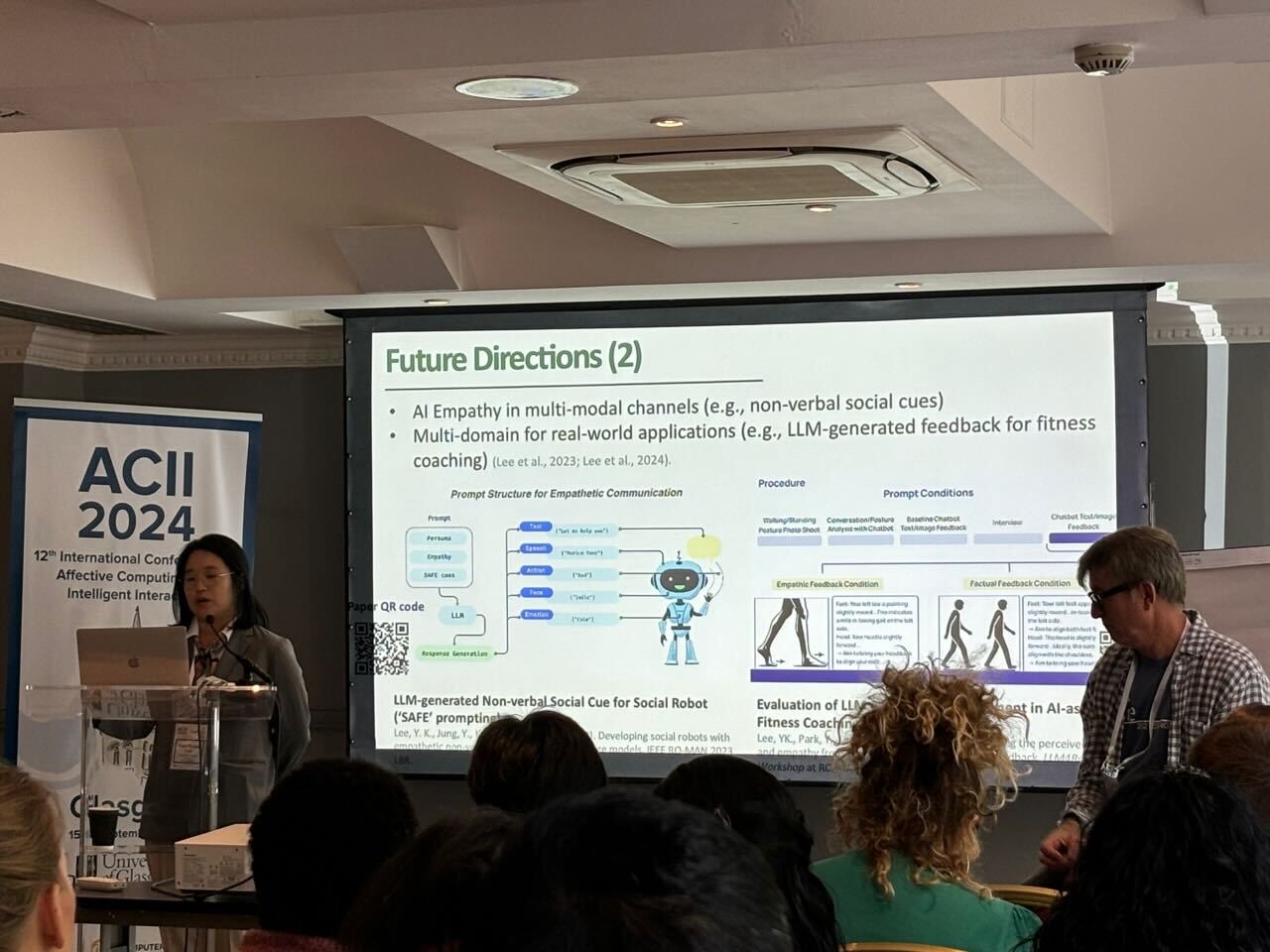

ACII 2024 homepage

paper