Description:

Large language models (LLMs) have offered new opportunities for emotional support, and recent work has shown that they can produce empathic responses to people in distress. However, long-term mental well-being requires emotional self-regulation, where a one-time empathic response falls short.

Yoon Kyung Lee, as a visiting researcher at the University of Texas at Austin in collaboration with researchers from Microsoft, explored whether LLMs could be guided to perform cognitive reappraisals, a psychological strategy aimed at reshaping the way individuals interpret negative experiences. Cognitive reappraisals are rooted in psychological principles and focus on altering the negative appraisals people make about challenging situations, which are often at the core of emotional distress.

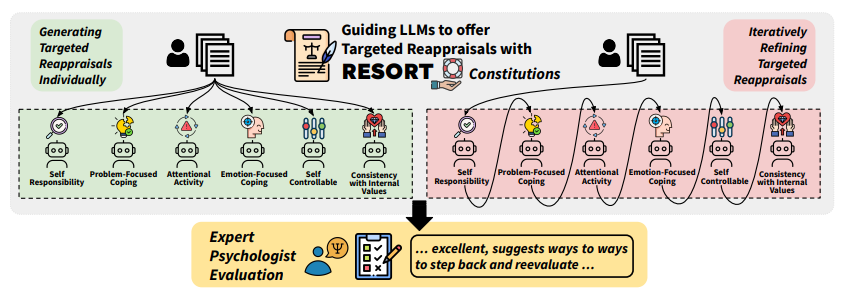

Her team hypothesized that psychologically grounded principles could enable such advanced psychological capabilities in LLMs, and designed RESORT, a series of reappraisal constitutions spanning multiple dimensions that LLMs can be instructed with.
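To make the idea of instructing an LLM with a set of constitutions more concrete, here is a minimal sketch of how such guidance might be assembled into a zero-shot prompt. It is not the RESORT implementation: the `Constitution` class, the `build_prompt` function, the dimension names, and the guidance strings are all hypothetical placeholders, and the actual constitutions and prompt format are defined in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constitution:
    dimension: str  # hypothetical name of an appraisal dimension
    guidance: str   # hypothetical instruction text for that dimension


# Placeholder entries for illustration only; these are NOT taken from RESORT.
CONSTITUTIONS = [
    Constitution("dimension_a", "<guidance text for dimension A>"),
    Constitution("dimension_b", "<guidance text for dimension B>"),
]


def build_prompt(message: str, constitutions: list[Constitution]) -> str:
    """Combine numbered constitutions and a user message into one prompt."""
    lines = ["Follow each principle below when responding to the message."]
    for i, c in enumerate(constitutions, start=1):
        lines.append(f"{i}. [{c.dimension}] {c.guidance}")
    lines.append("")
    lines.append(f"Message: {message}")
    lines.append("Response:")
    return "\n".join(lines)


# The resulting string would be sent to whichever LLM is being evaluated.
prompt = build_prompt("I failed my exam and feel hopeless.", CONSTITUTIONS)
print(prompt)
```

Keeping the constitutions as data rather than hard-coding them into the prompt makes it easy to swap in a different set of dimensions or to guide the model with one dimension at a time.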

In conjunction with clinical psychologists holding M.S. or Ph.D. degrees, her team conducted a pioneering evaluation of LLMs' zero-shot ability to generate cognitive reappraisal responses to medium-length social media messages seeking support. The results showed that even LLMs at the 7B scale, when guided by RESORT, can generate empathic responses that help users reappraise their situations.

[Accepted Papers]

Large Language Models are Capable of Offering Cognitive Reappraisal, if Guided